Sound Phase

Sound is temporal, social, invisible, and physical. In architecture, interaction design that uses sound brings together design, visual communication, and physical environments. Sound is a thing, an action, a mediator, and a feeling.

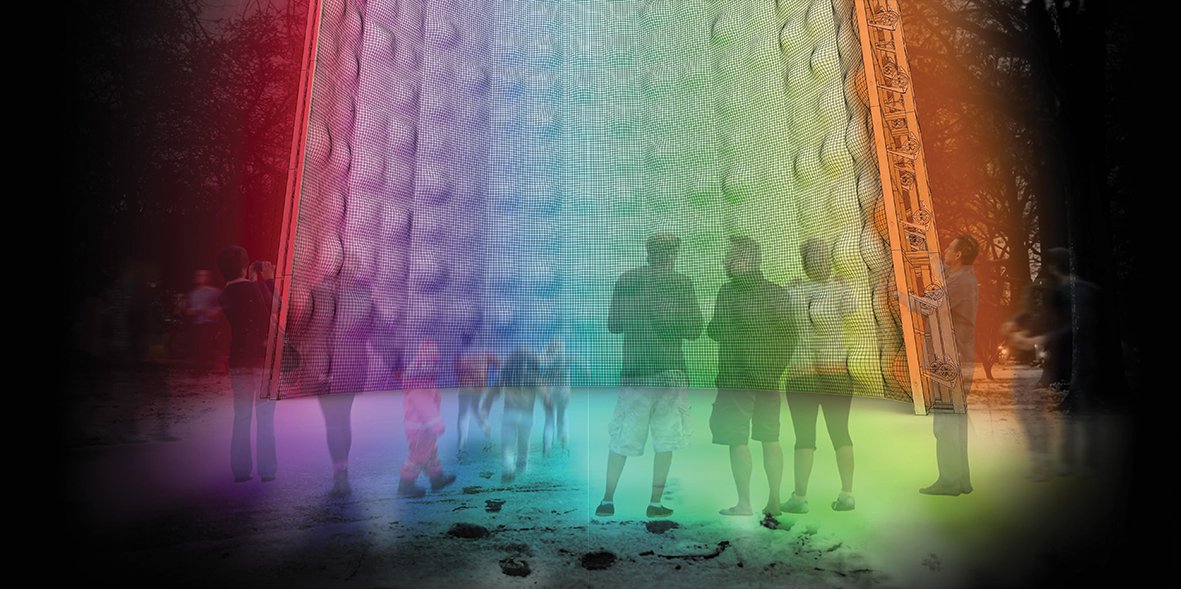

Light is the medium by which we are able to see, through its intensity and the way it is distributed. In architecture, lighting design is essential for building the visual environment, creating perceptual conditions, promoting a feeling of well-being, and communicating visually with users.

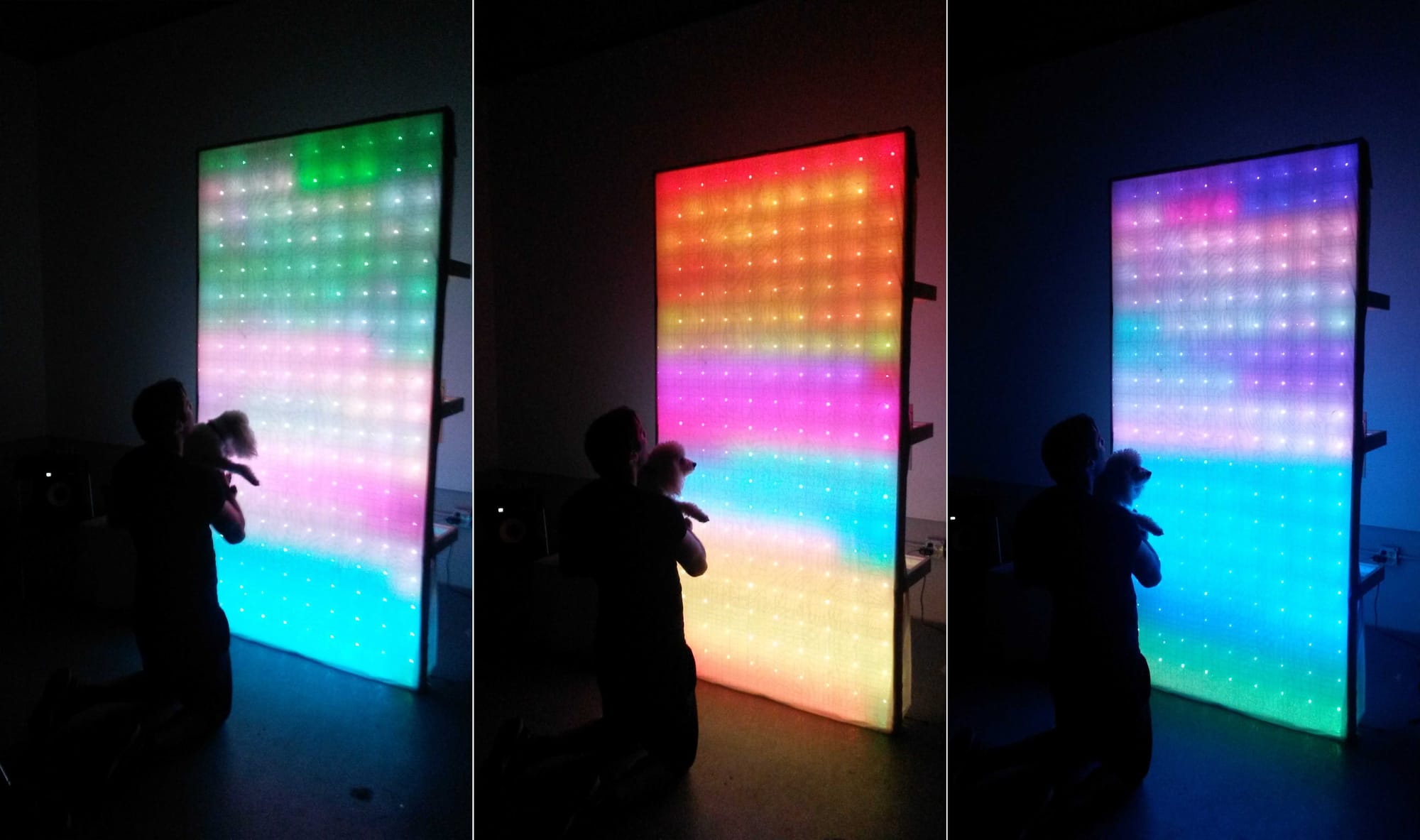

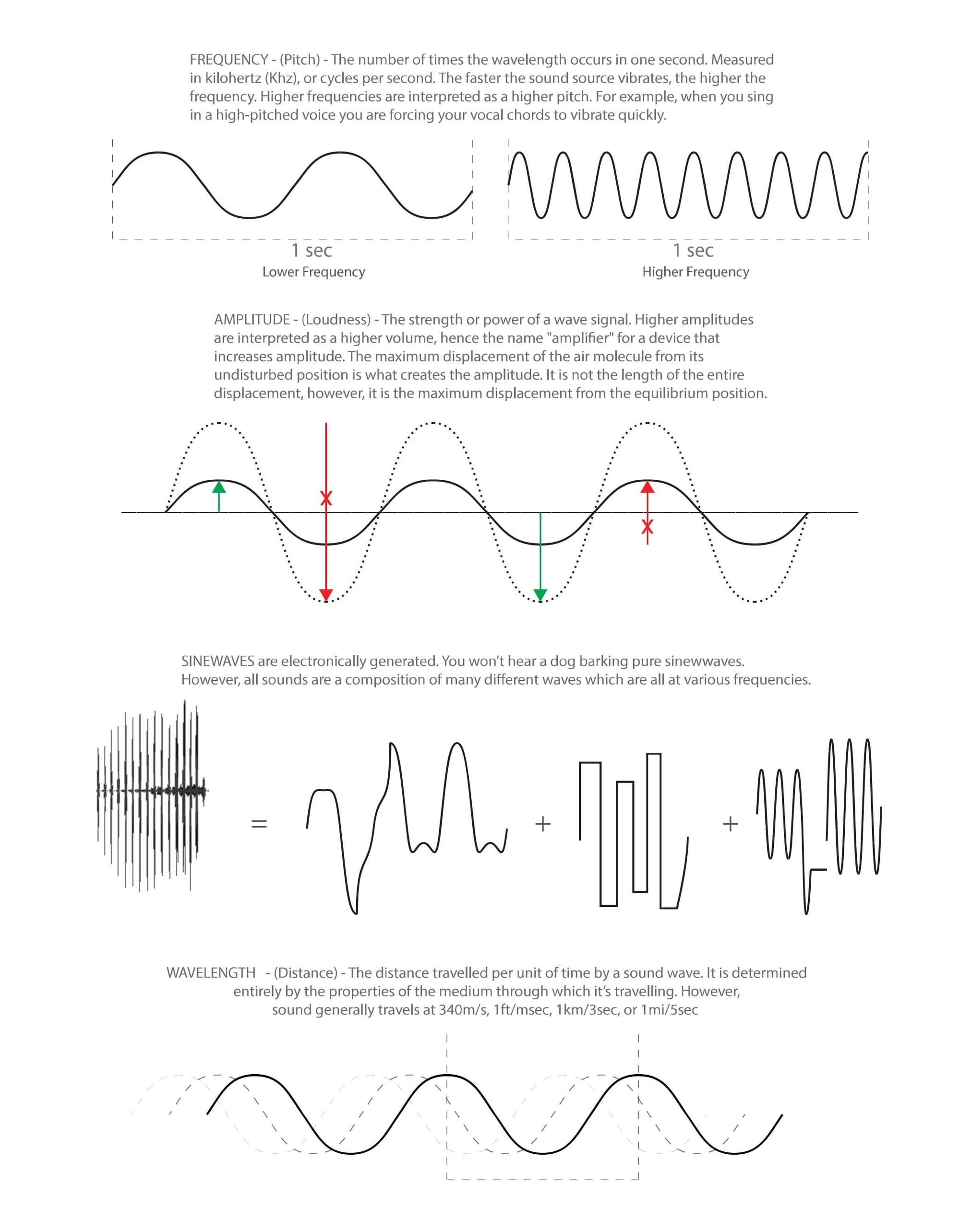

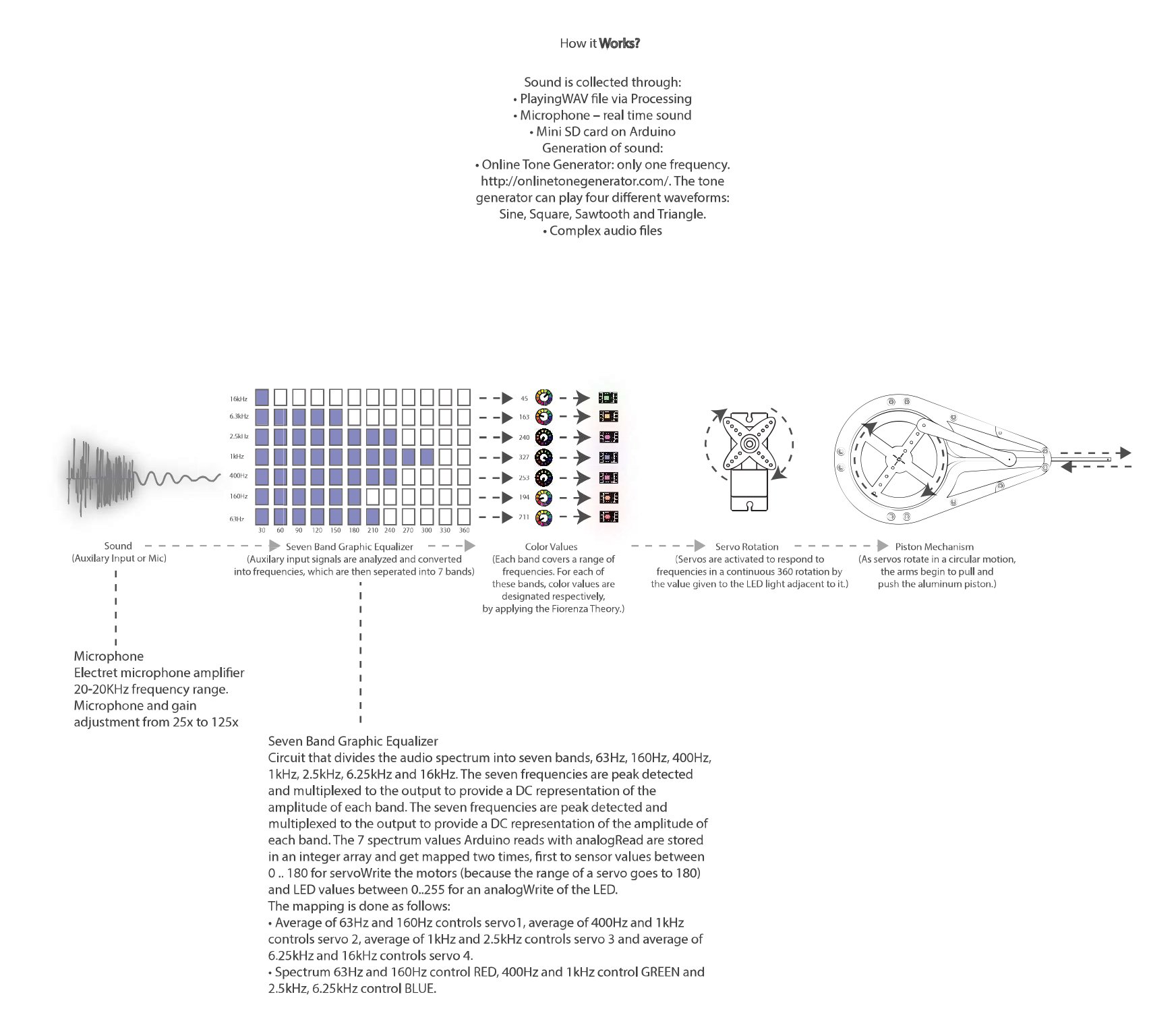

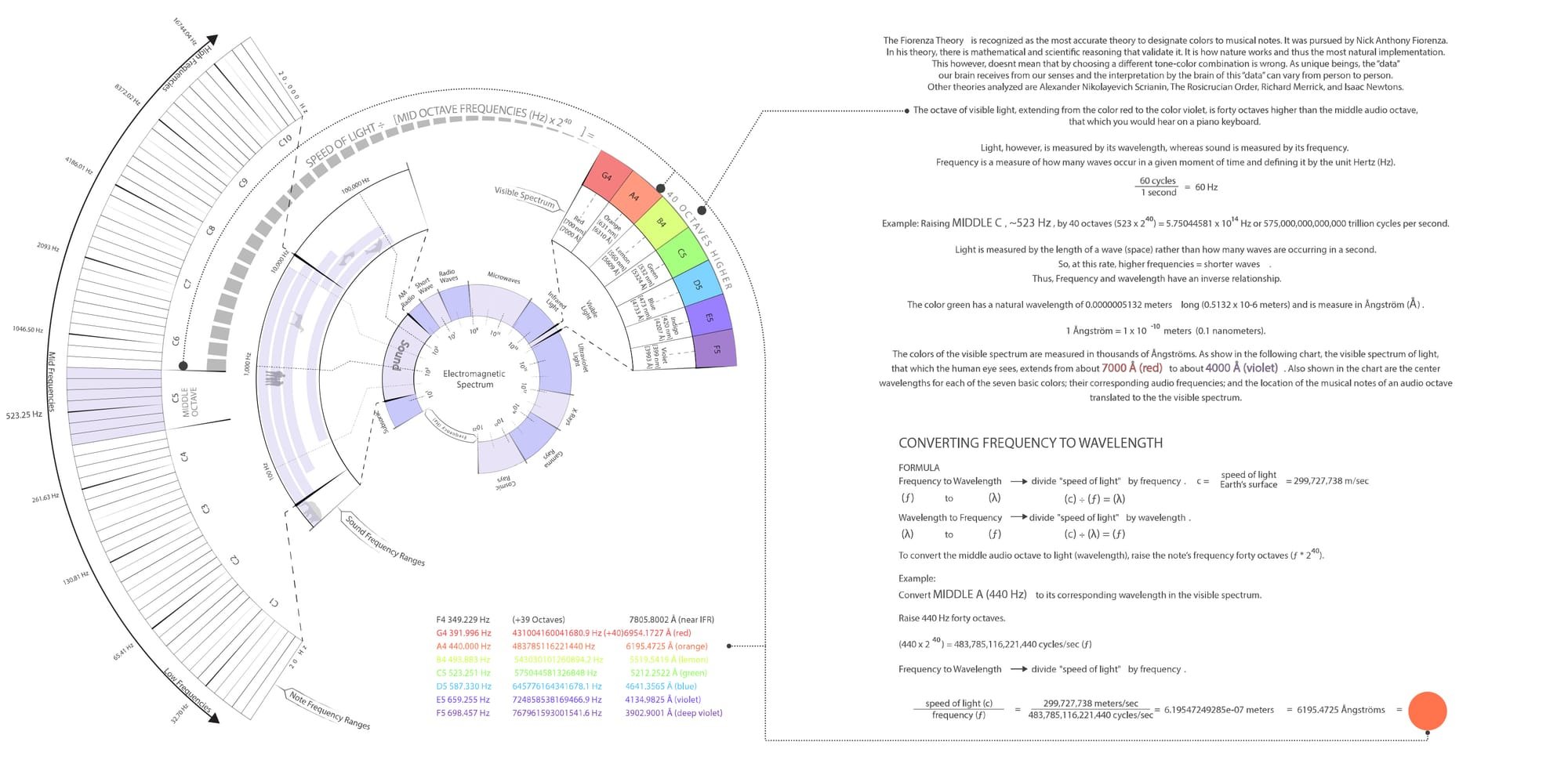

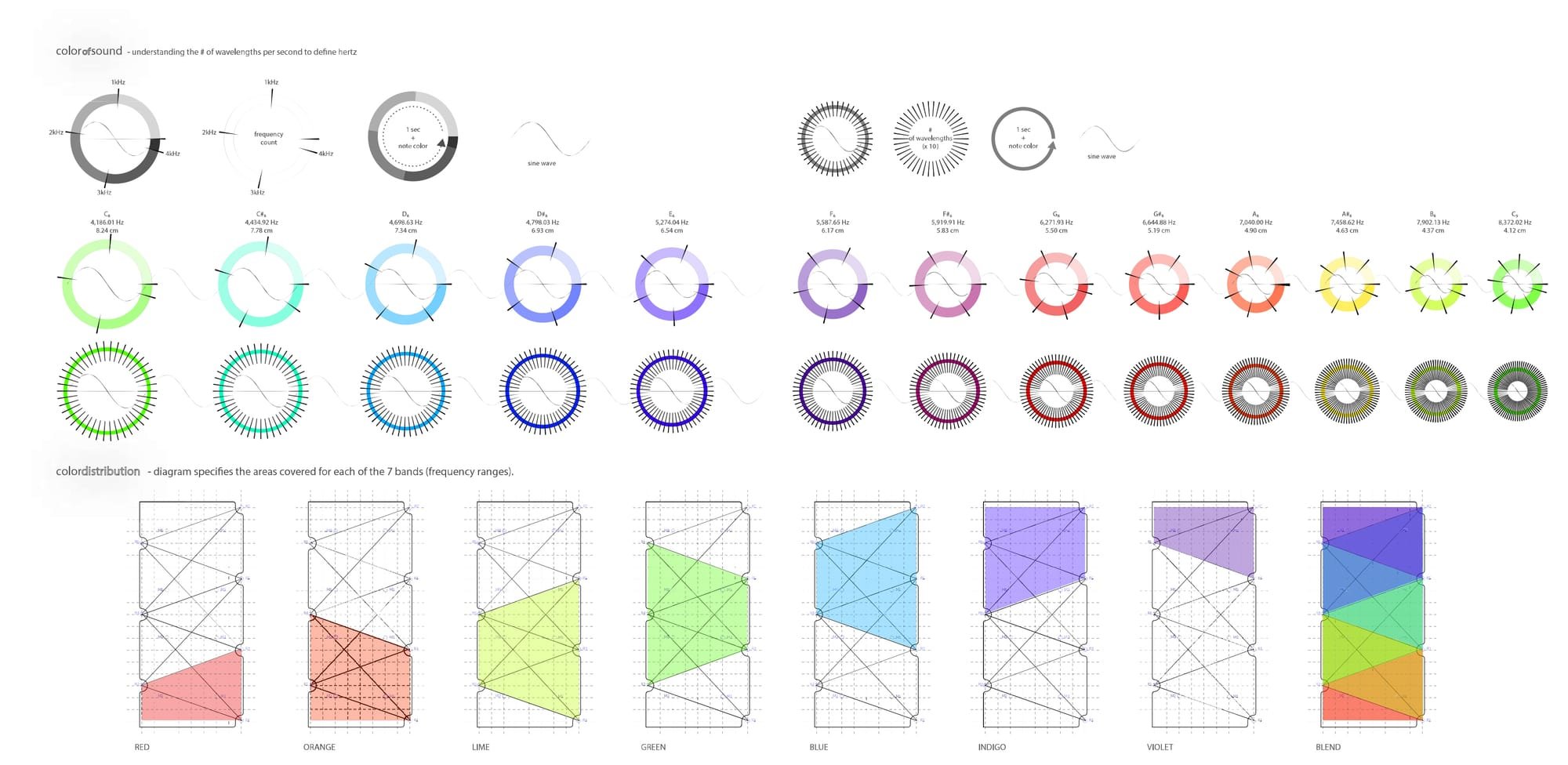

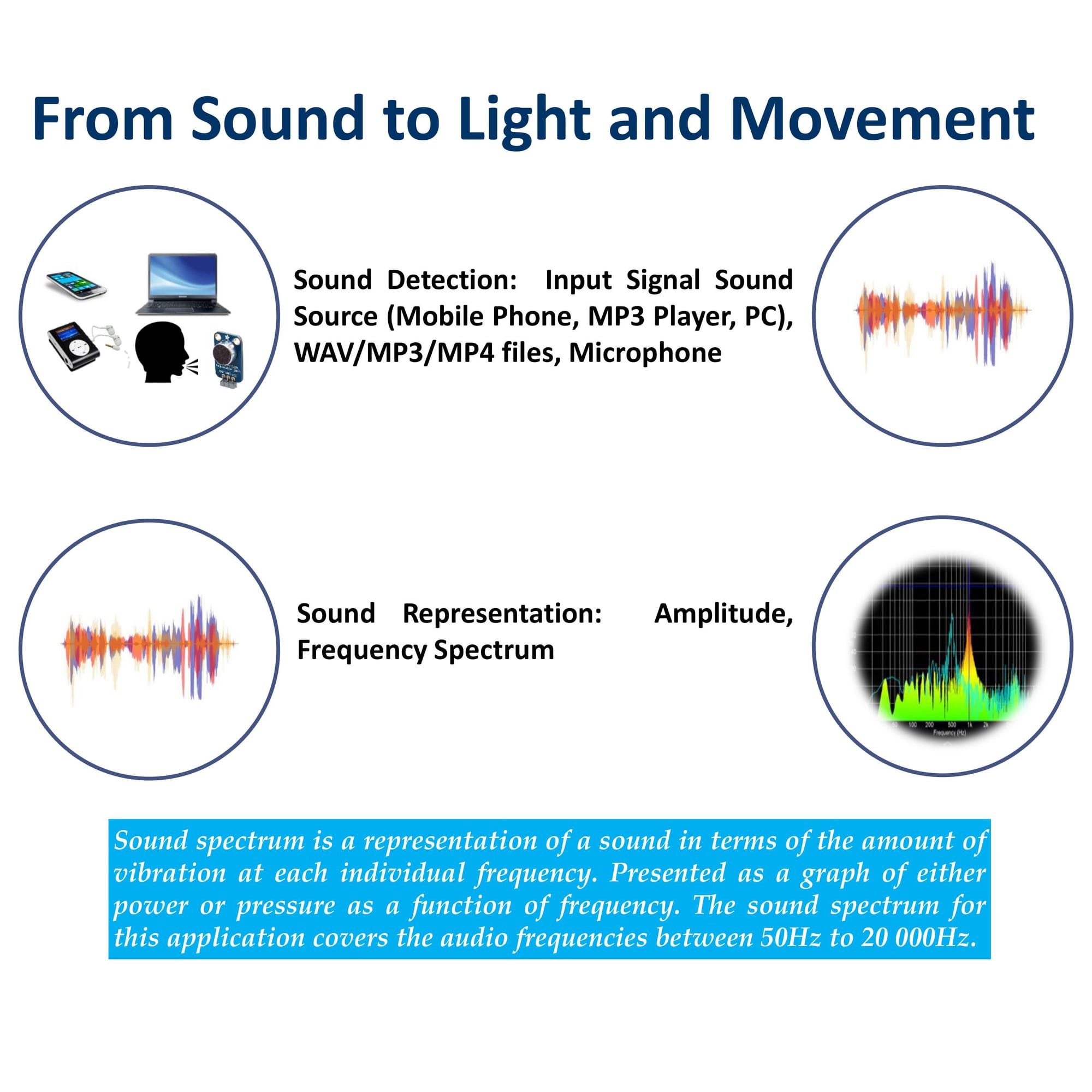

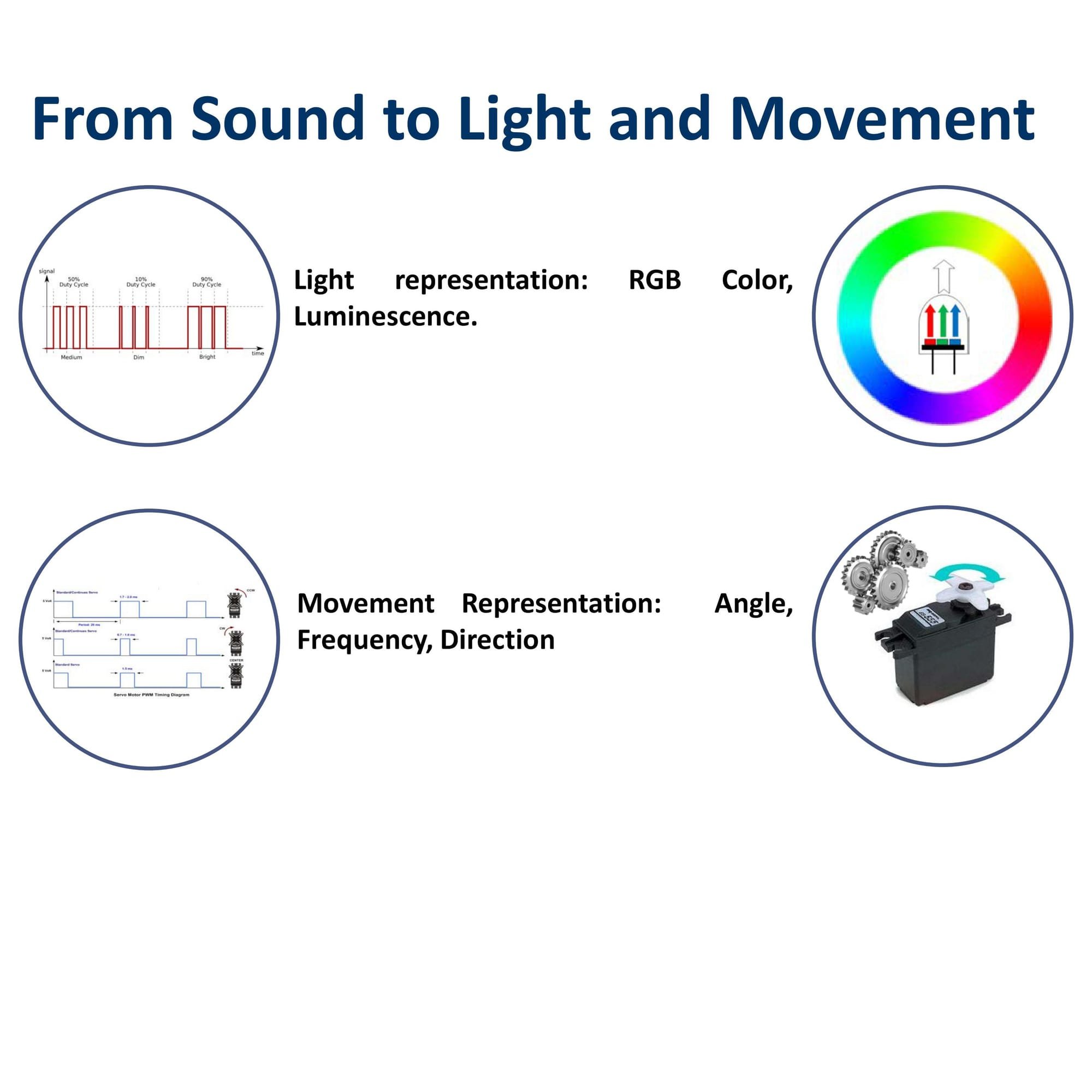

Music visualization is realized by generating animated movement, combinations of colors, and changes in light intensity based on sound (a voice, tones, or a piece of music). Changes in the sound's loudness, amplitude, and frequency spectrum are the properties used as input to the visualization.
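To make those inputs concrete, here is a minimal sketch of how loudness and a frequency spectrum could be extracted from one block of audio samples. It is a desktop Python illustration, not the project's Arduino code. Loudness is approximated by root-mean-square (RMS) amplitude. The spectrum comes from a naive discrete Fourier transform, chosen here for readability; a real implementation would normally use an FFT. The function names and the 64-sample test tone are hypothetical.

```python
import cmath
import math


def rms_amplitude(samples):
    """Root-mean-square amplitude of one block of samples, a common loudness proxy."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def magnitude_spectrum(samples):
    """Magnitudes of a naive O(N^2) DFT; returns N // 2 bins.

    Bin k corresponds to the frequency k * sample_rate / N.
    """
    n = len(samples)
    return [
        abs(sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2)
    ]


# Hypothetical test tone: a 64-sample sine wave completing exactly 4 cycles.
tone = [math.sin(2 * math.pi * 4 * t / 64) for t in range(64)]
loudness = rms_amplitude(tone)  # about 0.707 for a unit-amplitude sine
spectrum = magnitude_spectrum(tone)
peak_bin = max(range(len(spectrum)), key=spectrum.__getitem__)  # 4
```

In this example, a louder sound raises the RMS value, and a higher pitch moves the peak to a higher bin. A visualization can map these two changes to brightness and to color or position.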

In the following series of experiments, we explored various ways to visualize music through light and movement.

Properties of Sound

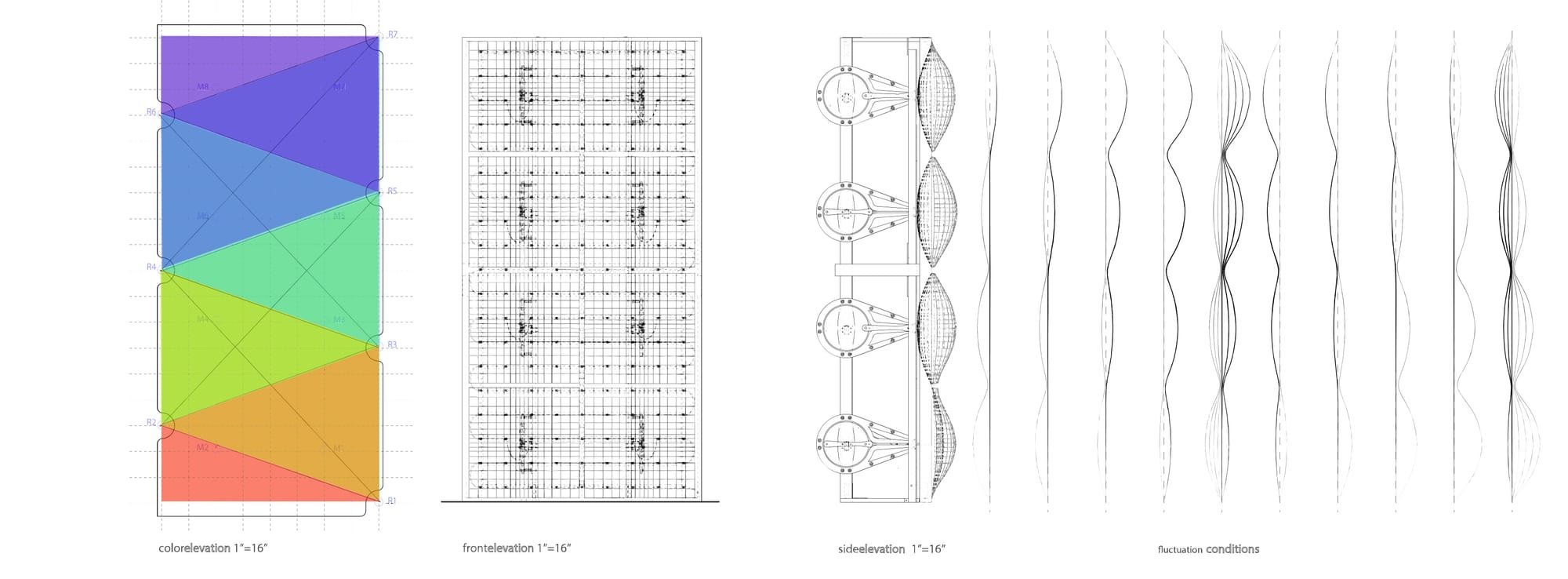

Prototyping a case study

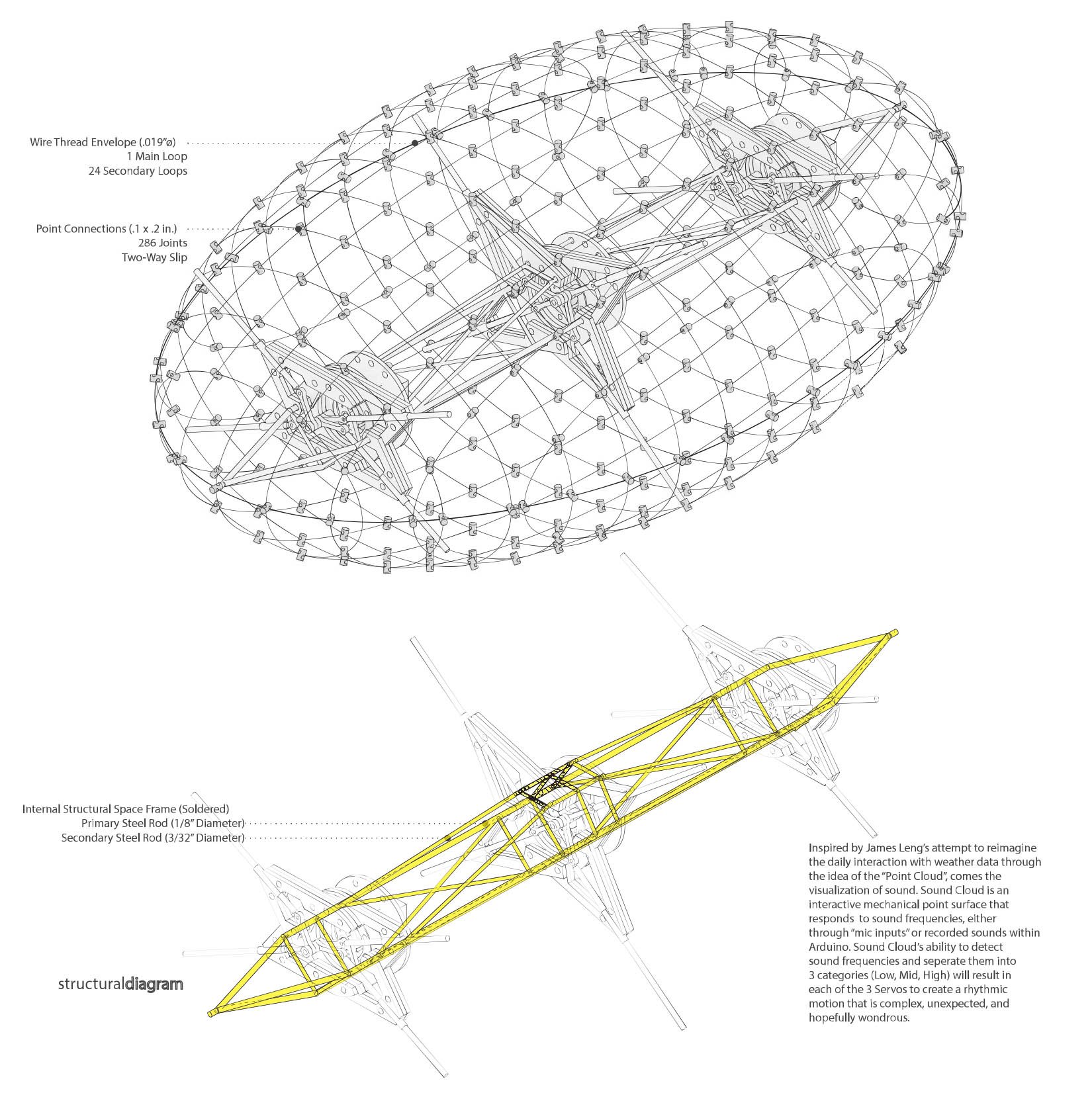

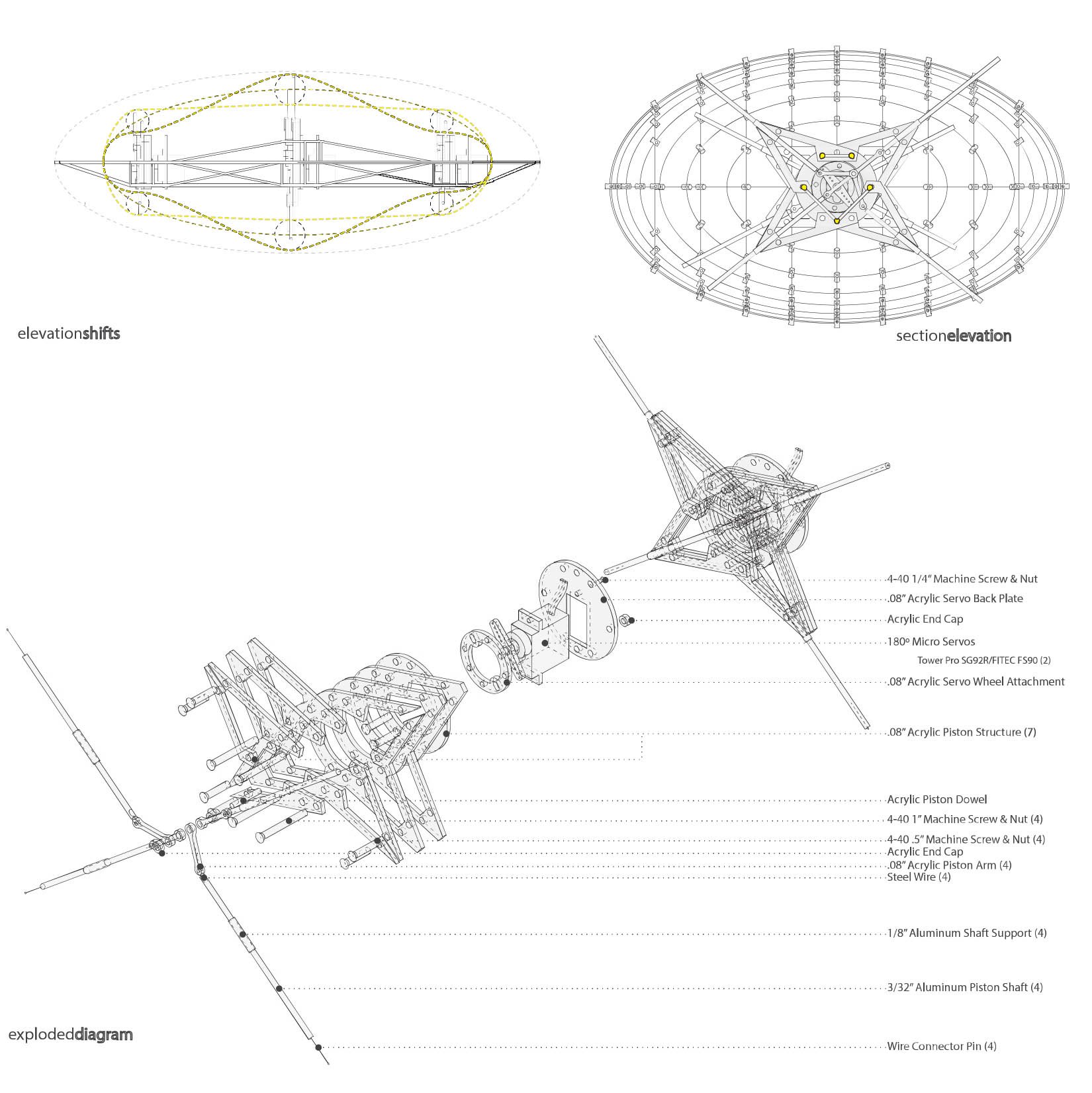

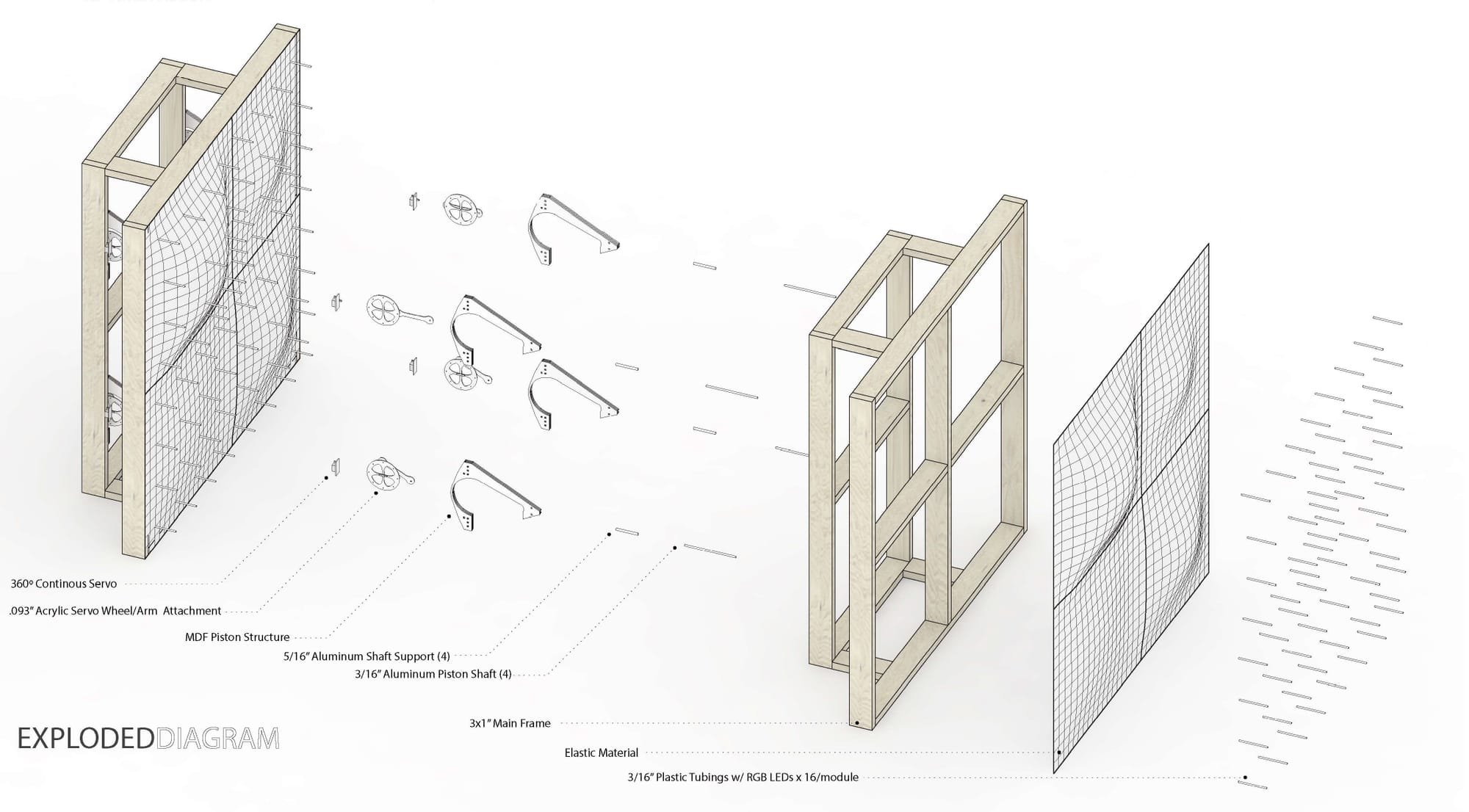

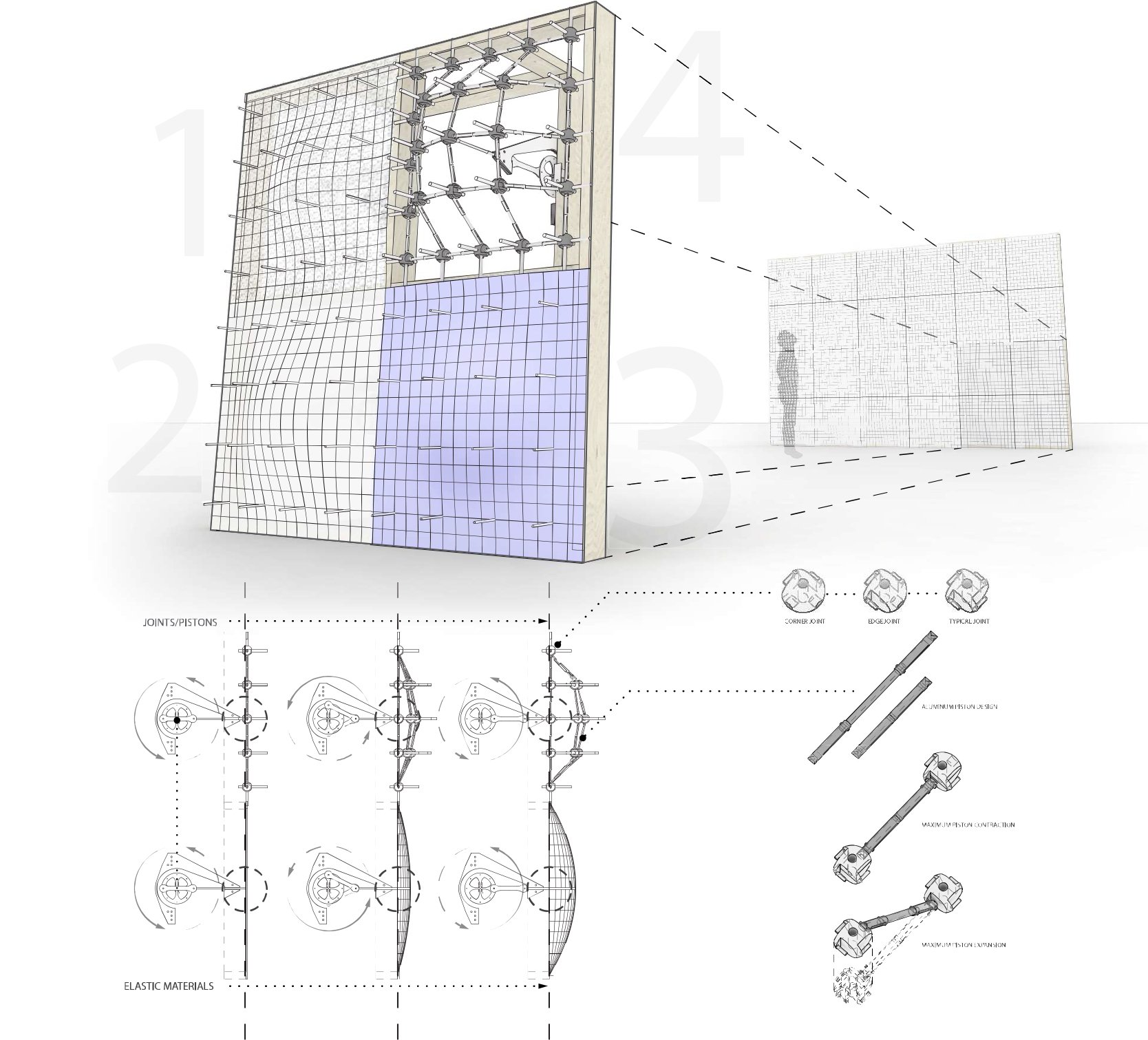

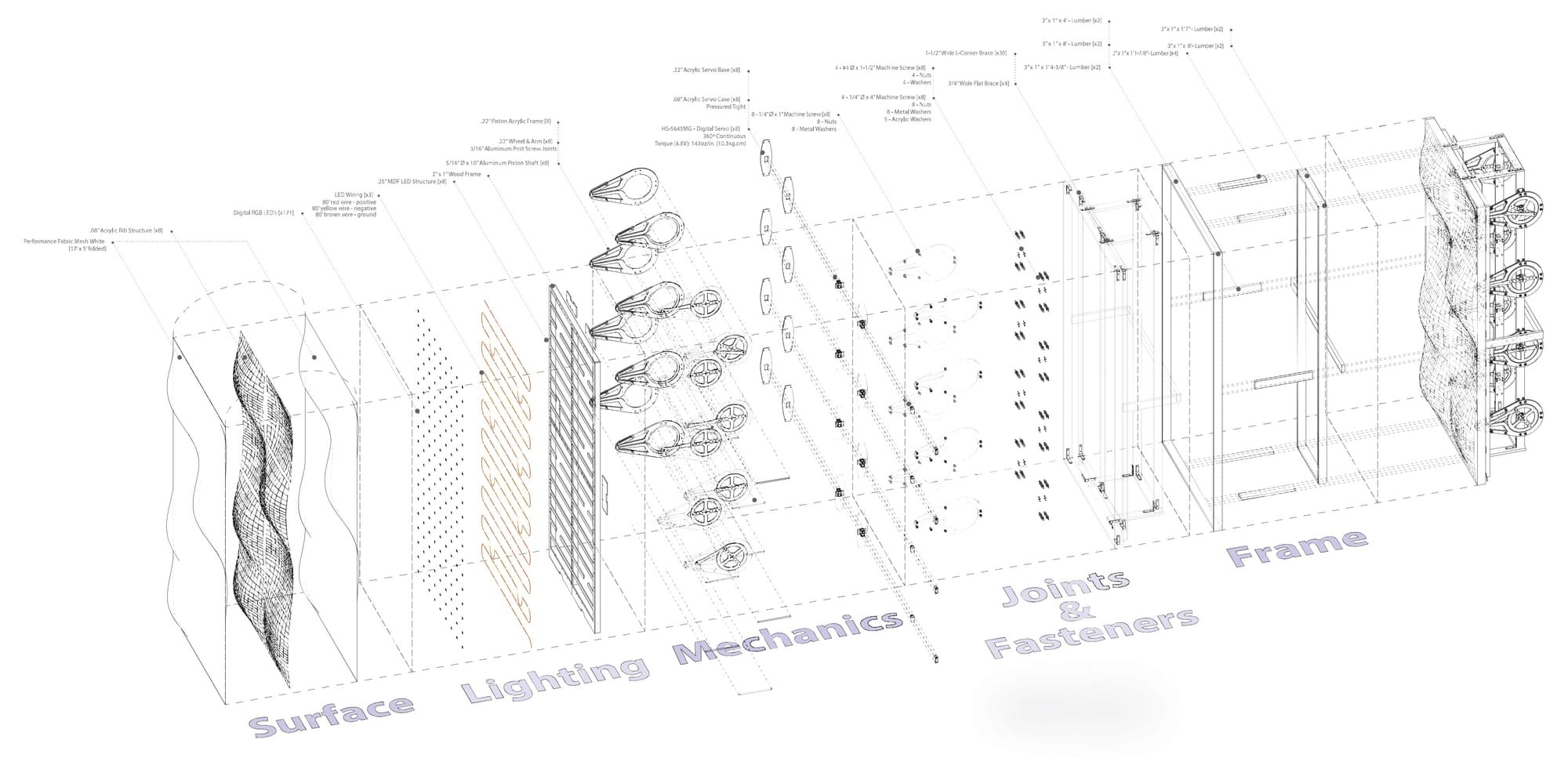

Sound Cloud, a visualization of sound, is inspired by James Leng's attempt to reimagine daily interaction with weather data through the idea of the "Point Cloud". It is an interactive mechanical point surface that responds to sound frequencies, taken either from microphone input or from sounds recorded on the Arduino. Sound Cloud detects sound frequencies and separates them into three bands (Low, Mid, High). Each band drives one of the three servos, producing a rhythmic motion that is complex, unexpected, and wondrous.
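The three-band split could work roughly as follows. This minimal sketch sums spectrum magnitudes into Low, Mid, and High bands and maps each band onto a 0 to 180 degree range, which is typical of hobby servos. The post does not state its band edges, sample rate, FFT size, or scaling. The 250 Hz and 2000 Hz edges, the 8 kHz sample rate, the 256-point FFT, and the `full_scale` value below are all hypothetical.

```python
# Hypothetical band edges in Hz; the project does not state where it splits the spectrum.
LOW_MID_EDGE_HZ = 250.0
MID_HIGH_EDGE_HZ = 2000.0


def band_energies(spectrum, sample_rate, fft_size):
    """Sum spectrum magnitudes into (low, mid, high) bands.

    spectrum holds fft_size // 2 bins; bin k sits at k * sample_rate / fft_size Hz.
    """
    low = mid = high = 0.0
    for k, magnitude in enumerate(spectrum):
        freq = k * sample_rate / fft_size
        if freq < LOW_MID_EDGE_HZ:
            low += magnitude
        elif freq < MID_HIGH_EDGE_HZ:
            mid += magnitude
        else:
            high += magnitude
    return low, mid, high


def to_servo_angle(energy, full_scale):
    """Linearly map a band energy onto a 0-180 degree servo range, clamped at both ends."""
    if full_scale <= 0:
        return 0
    ratio = min(max(energy / full_scale, 0.0), 1.0)
    return round(ratio * 180)


# Hypothetical example: 128 bins from a 256-point FFT at 8 kHz (31.25 Hz per bin),
# with energy only in bin 40 (1250 Hz, inside the mid band).
spectrum = [0.0] * 128
spectrum[40] = 50.0
low, mid, high = band_energies(spectrum, sample_rate=8000, fft_size=256)
angles = [to_servo_angle(e, full_scale=100.0) for e in (low, mid, high)]  # [0, 90, 0]
```

Here, only the mid-band servo moves, to half its range. Clamping ensures that a sudden loud sound cannot command an angle outside what the servo accepts.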

Proposal

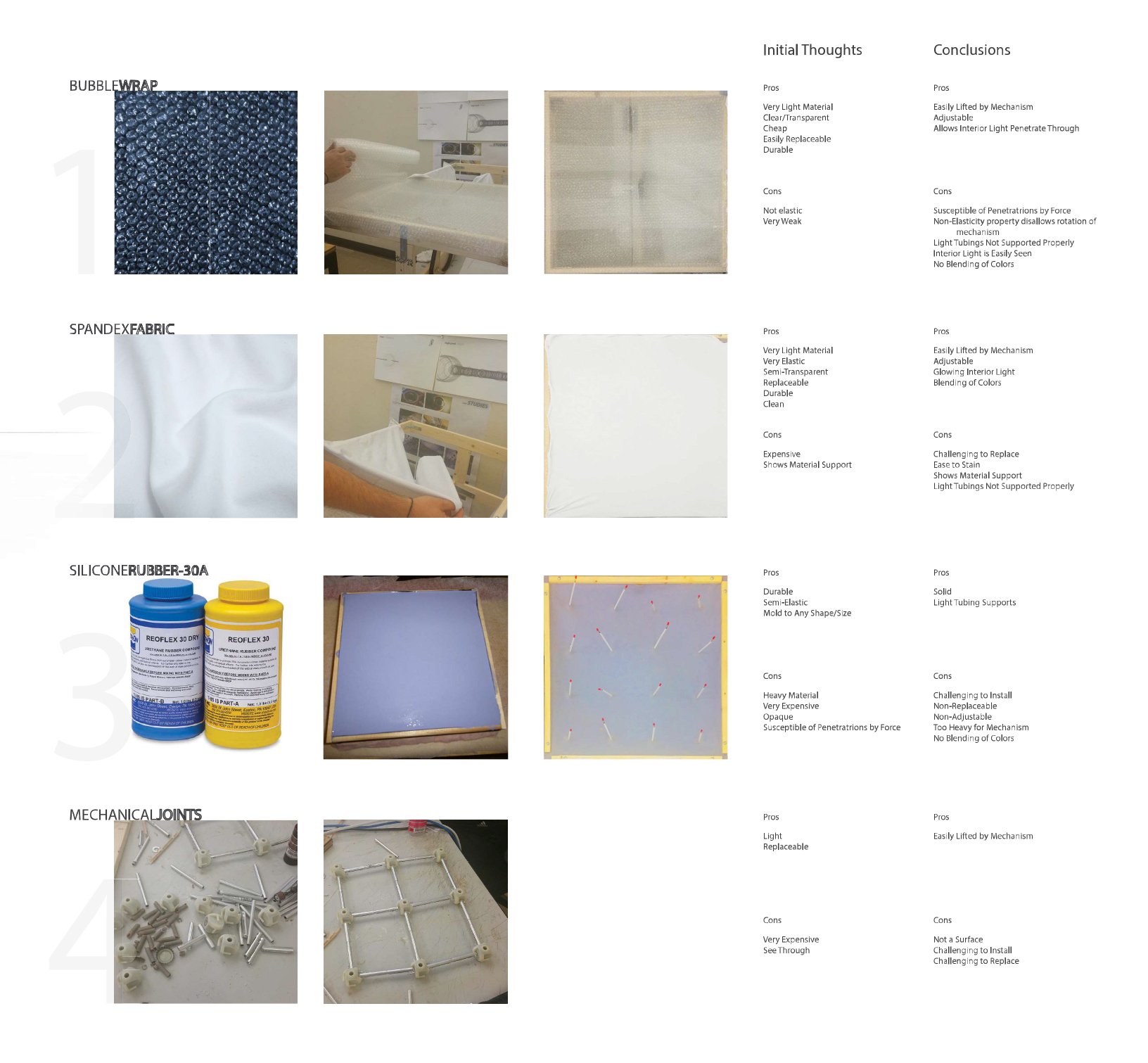

Surface Material proposal

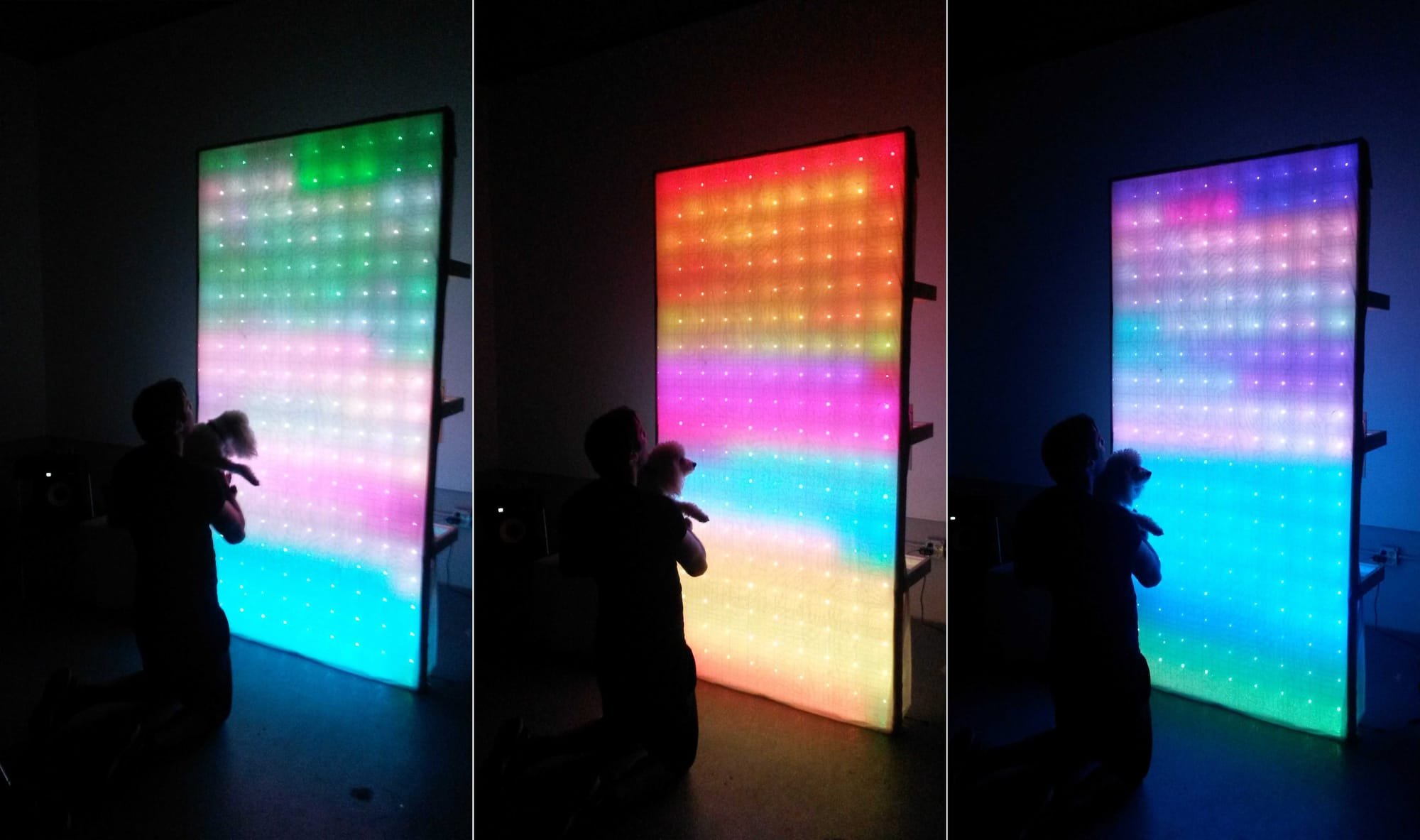

Final Project

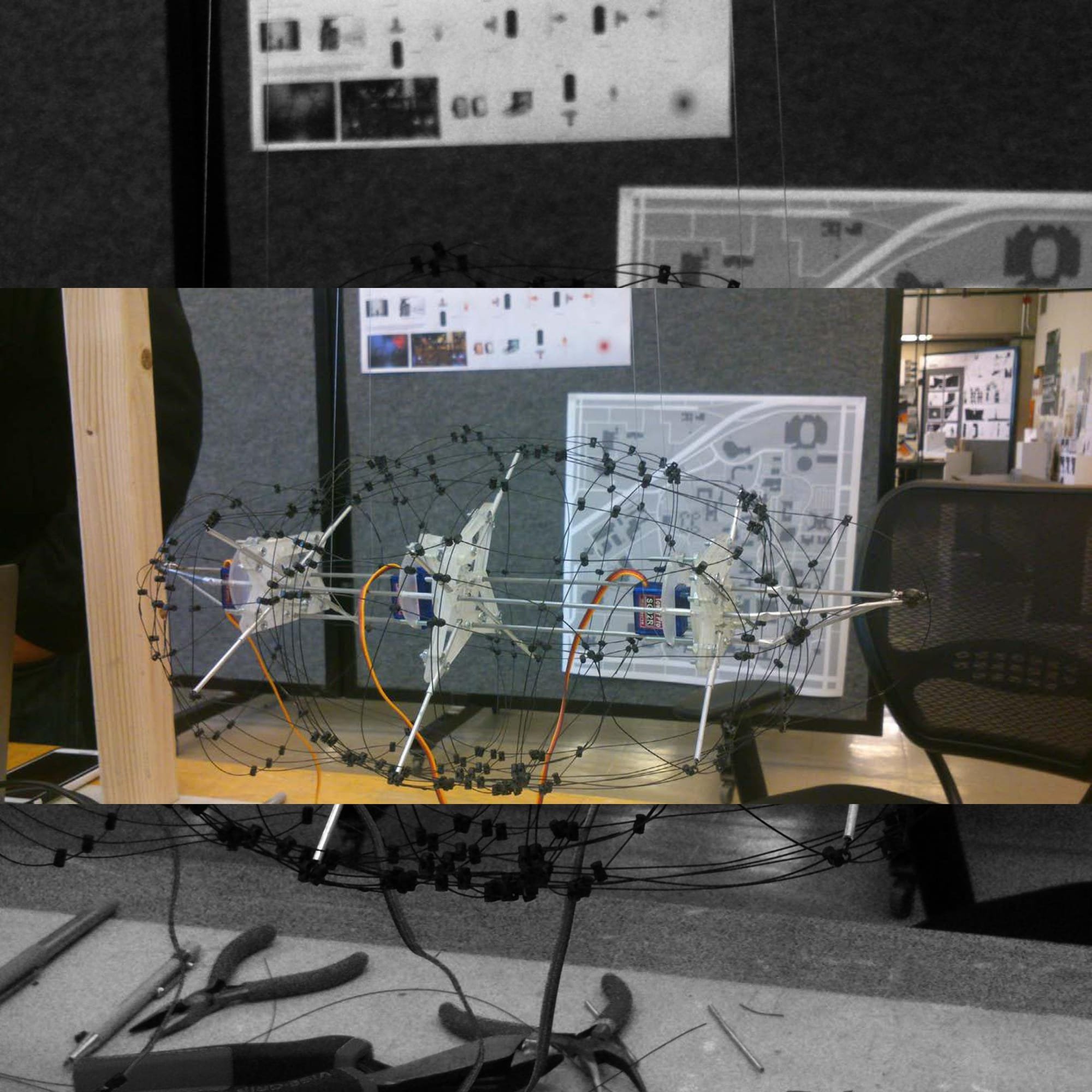

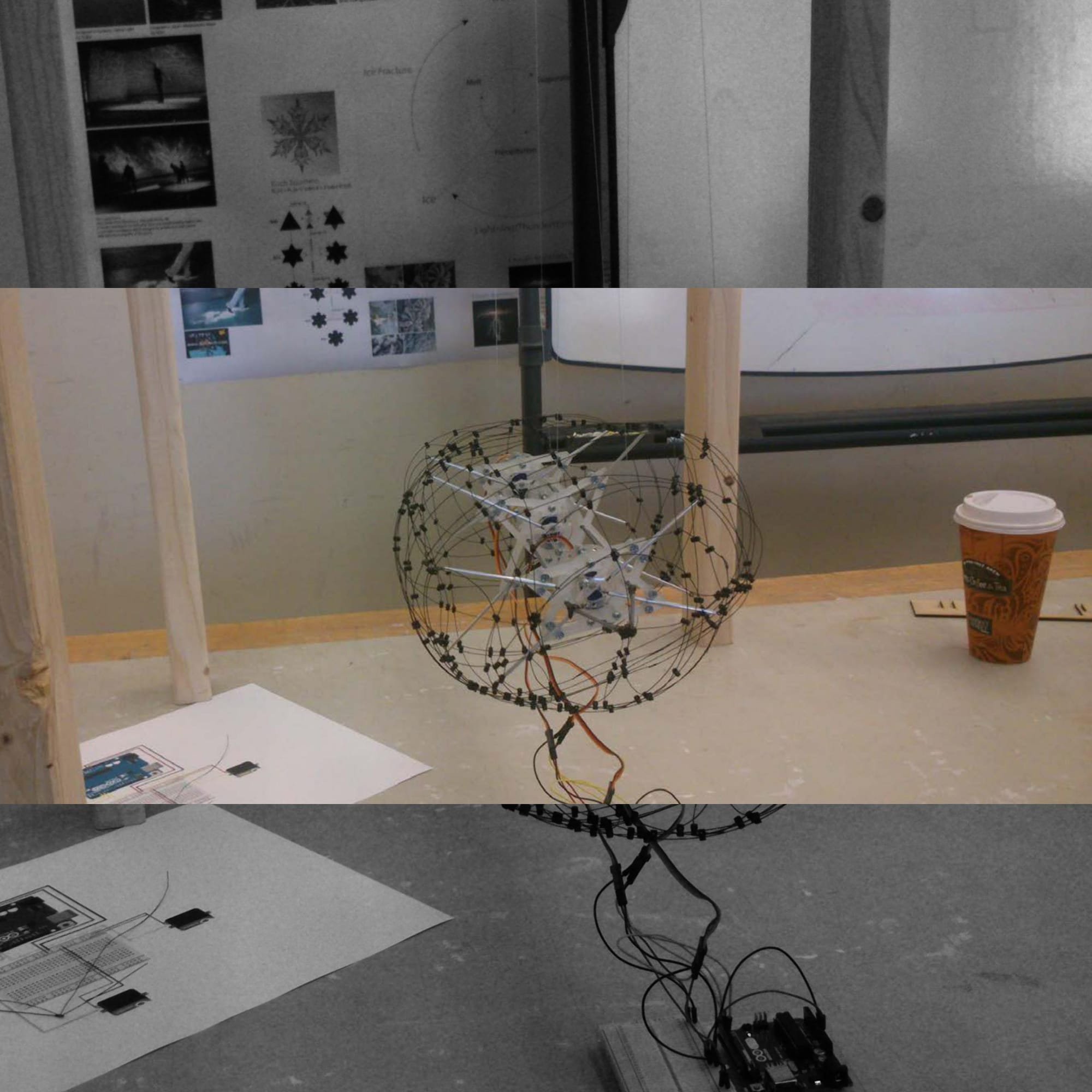

Behind the scenes

Pseudocode for the Arduino software:

For every loop:

1. Run the audio sampling interrupt.
2. Wait for audio sampling to finish.
3. Remove noise and apply equalizer levels.
4. Downsample the 128-value spectrum to 8 values (averaging over the last 10 frames).
5. Map the 8 values to servo movement.
6. Map the 8 values to the LED lights.
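The processing steps after sampling could be prototyped as below. This is a minimal Python sketch of the pseudocode's logic, not the project's actual Arduino code, and it rests on several assumptions:

* It subtracts a constant noise floor from every bin.
* It groups the 128 bins into 8 equal-width bands of 16 bins each. The post does not say how bins are grouped, and log-spaced bands are also common.
* It applies one equalizer gain per band.
* It smooths each band with a moving average over the last 10 frames.

The noise floor, EQ gains, and `full_scale` are hypothetical tuning values. Servo output assumes a 0 to 180 degree range. LED output assumes 8-bit brightness (0 to 255), the range Arduino's `analogWrite` accepts.

```python
from collections import deque

NUM_BINS = 128   # spectrum size named in the pseudocode
NUM_BANDS = 8    # values driven to the servos and LEDs
HISTORY = 10     # frames averaged, per the pseudocode

# Hypothetical tuning values; the post does not give its noise floor or EQ levels.
NOISE_FLOOR = 2.0
EQ_GAINS = [1.0, 1.0, 1.2, 1.2, 1.5, 1.5, 2.0, 2.0]


def clean(spectrum):
    """Subtract a constant noise floor from every bin, clamping at zero."""
    return [max(m - NOISE_FLOOR, 0.0) for m in spectrum]


def downsample(spectrum):
    """Average each run of 16 adjacent bins into one of 8 bands, then apply its EQ gain."""
    width = NUM_BINS // NUM_BANDS
    return [
        EQ_GAINS[b] * sum(spectrum[b * width:(b + 1) * width]) / width
        for b in range(NUM_BANDS)
    ]


class Smoother:
    """Moving average of the band values over the last HISTORY frames."""

    def __init__(self):
        self.frames = deque(maxlen=HISTORY)  # oldest frame drops out automatically

    def update(self, bands):
        self.frames.append(bands)
        n = len(self.frames)
        return [sum(frame[b] for frame in self.frames) / n for b in range(NUM_BANDS)]


def scale(value, full_scale, out_max):
    """Clamp value / full_scale to [0, 1] and scale it to an integer in [0, out_max]."""
    ratio = min(max(value / full_scale, 0.0), 1.0)
    return round(ratio * out_max)


def process_frame(spectrum, smoother, full_scale=50.0):
    """One loop iteration after sampling: returns (servo angles, LED brightness levels)."""
    bands = smoother.update(downsample(clean(spectrum)))
    servo_angles = [scale(v, full_scale, 180) for v in bands]
    led_levels = [scale(v, full_scale, 255) for v in bands]
    return servo_angles, led_levels


# Usage: a silent frame followed by a loud one; smoothing softens the jump.
smoother = Smoother()
for frame in ([0.0] * NUM_BINS, [27.0] * NUM_BINS):
    servo_angles, led_levels = process_frame(frame, smoother)
```

Averaging over several frames trades responsiveness for calmer motion. Without it, the servos would twitch on every transient. A shorter history makes the surface react faster.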

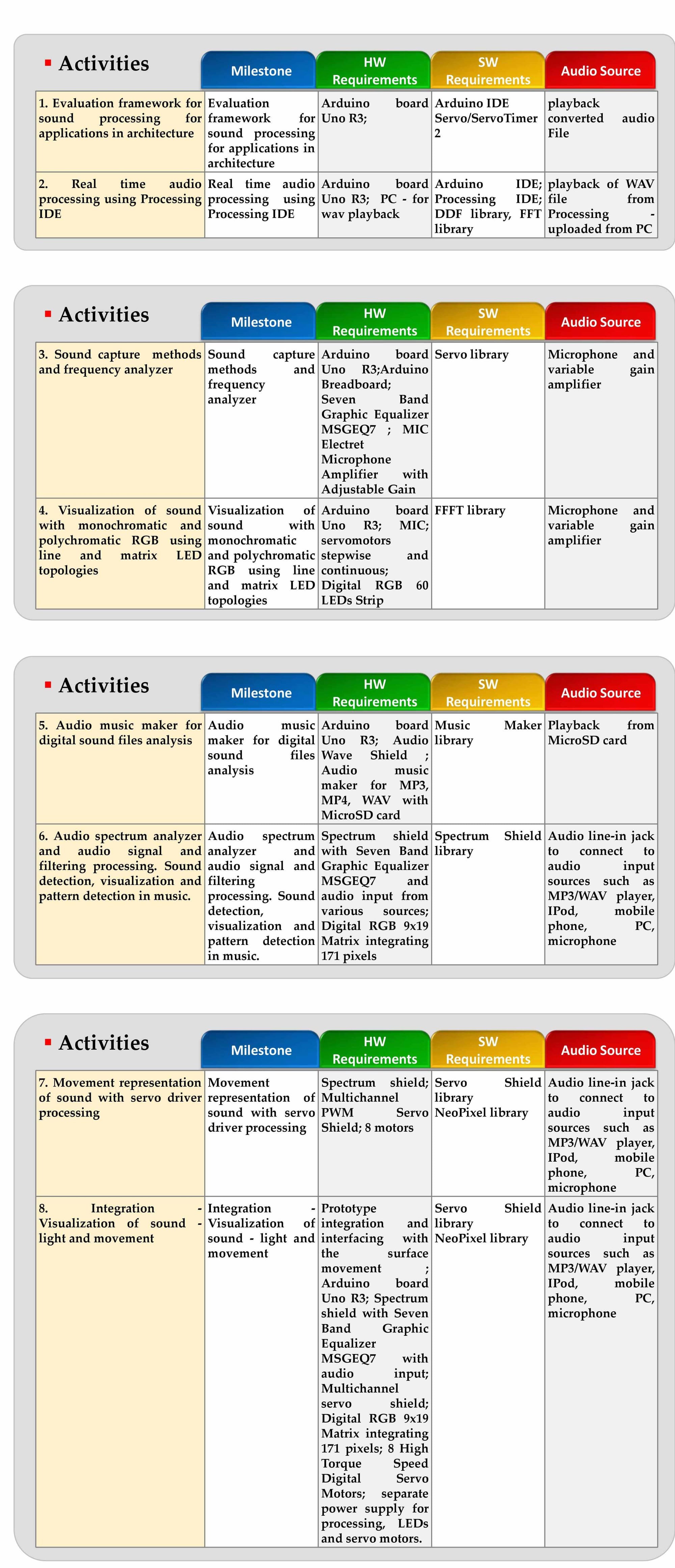

Milestones

Details of the Final Project

Real footage of the project