Paint Machine Using Facial Gesture Recognition

The main concept of this project is a co-creative drawing agent that collaborates with human users in real-time artistic work, using the user's facial gestures as input. The system is designed to stimulate the user's creativity by introducing unexpected contributions at the right moment in the creative process.

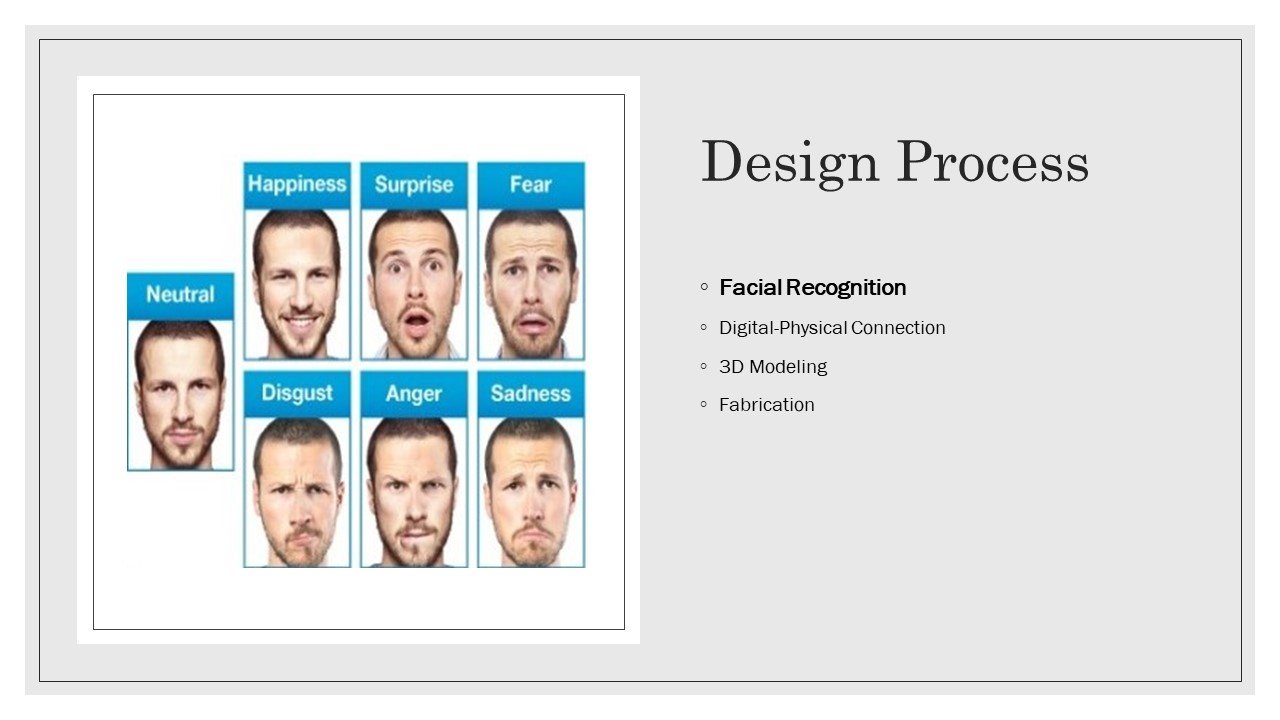

The first step for this project was solving the facial gesture recognition problem. The code was written in Python, using the machine learning library Keras to train a model to recognize seven facial expressions: 'angry', 'disgust', 'fear', 'happy', 'sad', 'surprise', and 'neutral'.

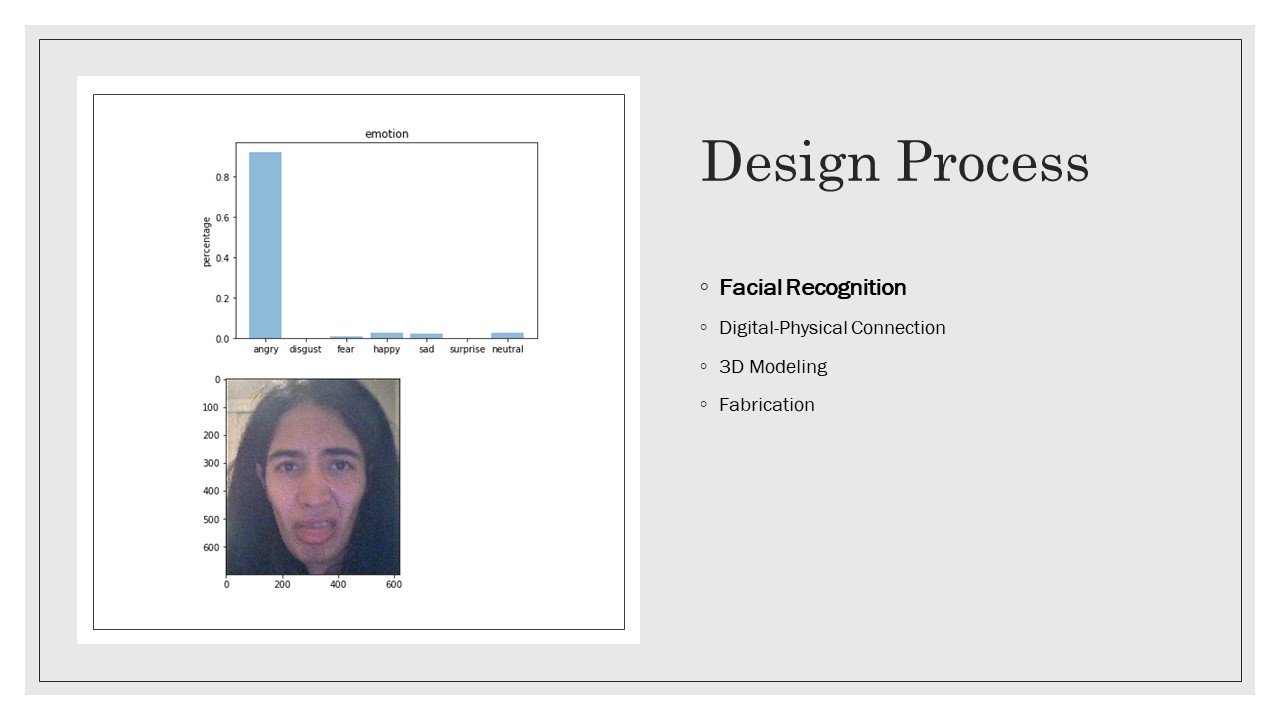
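A minimal sketch of such a classifier in Keras follows. It assumes 48x48 input images with three (RGB) channels; the layer sizes, the `build_model` name and the label order are illustrative choices, not the project's actual architecture.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# The seven expressions named in the text; this index order is an assumption.
EMOTIONS = ['angry', 'disgust', 'fear', 'happy', 'sad', 'surprise', 'neutral']

def build_model(input_shape=(48, 48, 3), num_classes=len(EMOTIONS)):
    """Small CNN that outputs one softmax probability per expression (hypothetical sizes)."""
    model = keras.Sequential([
        keras.Input(shape=input_shape),
        # Two convolution + pooling stages extract local facial features.
        layers.Conv2D(32, 3, activation='relu', padding='same'),
        layers.MaxPooling2D(),
        layers.Conv2D(64, 3, activation='relu', padding='same'),
        layers.MaxPooling2D(),
        layers.Flatten(),
        layers.Dropout(0.5),  # reduces overfitting on a small dataset
        layers.Dense(128, activation='relu'),
        layers.Dense(num_classes, activation='softmax'),
    ])
    # Integer labels (0..6) pair with sparse categorical cross-entropy.
    model.compile(optimizer='adam',
                  loss='sparse_categorical_crossentropy',
                  metrics=['accuracy'])
    return model

def predict_emotion(model, image):
    """Return the most likely expression name for a single image array."""
    probs = model.predict(image[np.newaxis, ...], verbose=0)[0]
    return EMOTIONS[int(np.argmax(probs))]
```

Training would then be a call such as `model.fit(x_train, y_train, epochs=30, validation_split=0.1)`, where the epoch count is only an example and `y_train` holds integer label indices.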

The training dataset consisted of 3588 photos, each stored as an array of pixels with their corresponding RGB values and labeled with a facial gesture. After training a model on this dataset, I used it to make predictions on new photos and on live video.
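One way to turn such labeled pixel data into training arrays is sketched below. It assumes each sample is stored as an integer label plus a space-separated string of pixel values (a common CSV layout for expression datasets, but an assumption here); the function names and the 48x48x3 default shape are hypothetical.

```python
import numpy as np

def parse_sample(pixel_string, height=48, width=48, channels=3):
    """Convert a space-separated pixel string into a normalized image array."""
    values = np.array(pixel_string.split(), dtype=np.float32)
    expected = height * width * channels
    if values.size != expected:
        raise ValueError(f"expected {expected} values, got {values.size}")
    # Scale 0-255 intensities into [0, 1], which usually helps training converge.
    return values.reshape(height, width, channels) / 255.0

def load_dataset(rows, height=48, width=48, channels=3):
    """rows: iterable of (label_index, pixel_string) pairs -> (images, labels)."""
    images, labels = [], []
    for label, pixels in rows:
        images.append(parse_sample(pixels, height, width, channels))
        labels.append(int(label))
    return np.stack(images), np.array(labels, dtype=np.int64)
```

The resulting `images` and `labels` arrays can be passed directly to a Keras `model.fit` call.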

However, I realized that some gestures (such as fear and disgust) are much harder to recognize. For example, in this photo I was trying to make a disgusted face, but the computer labeled the gesture as angry.

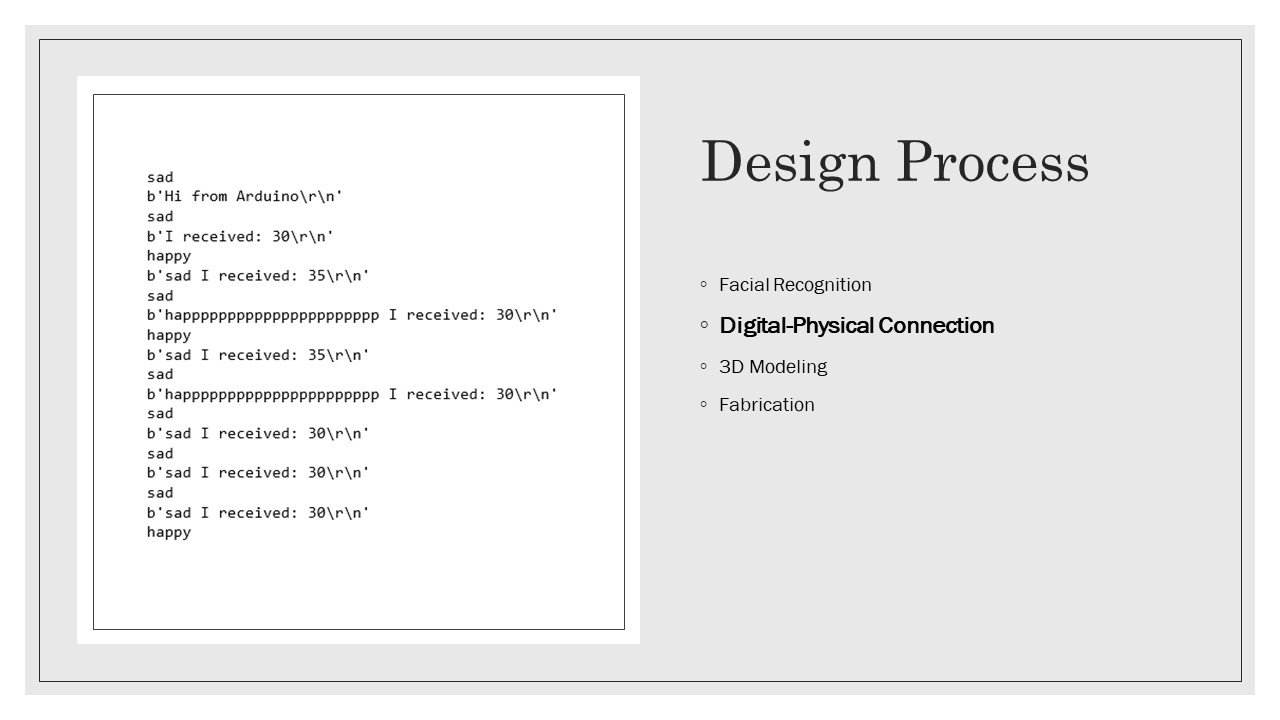
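To see which gestures a model struggles with beyond individual photos, one option is to compute per-class recall and the most frequent confusions on a held-out test set. The pure-Python sketch below does this; the function names are illustrative and not part of the original project.

```python
from collections import Counter

def per_class_recall(true_labels, predicted_labels):
    """Fraction of each true class that the model labeled correctly."""
    totals = Counter(true_labels)
    correct = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)
    return {label: correct[label] / totals[label] for label in totals}

def most_common_confusions(true_labels, predicted_labels, top=3):
    """Most frequent (true, predicted) pairs where the model was wrong."""
    mistakes = Counter((t, p) for t, p in zip(true_labels, predicted_labels) if t != p)
    return mistakes.most_common(top)
```

A low recall for 'disgust' combined with a frequent ('disgust', 'angry') pair would turn the single misclassified photo into a measurable pattern.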

The next phase was to connect the digital and physical parts of the project, which meant connecting the Python code to an Arduino over the serial port.

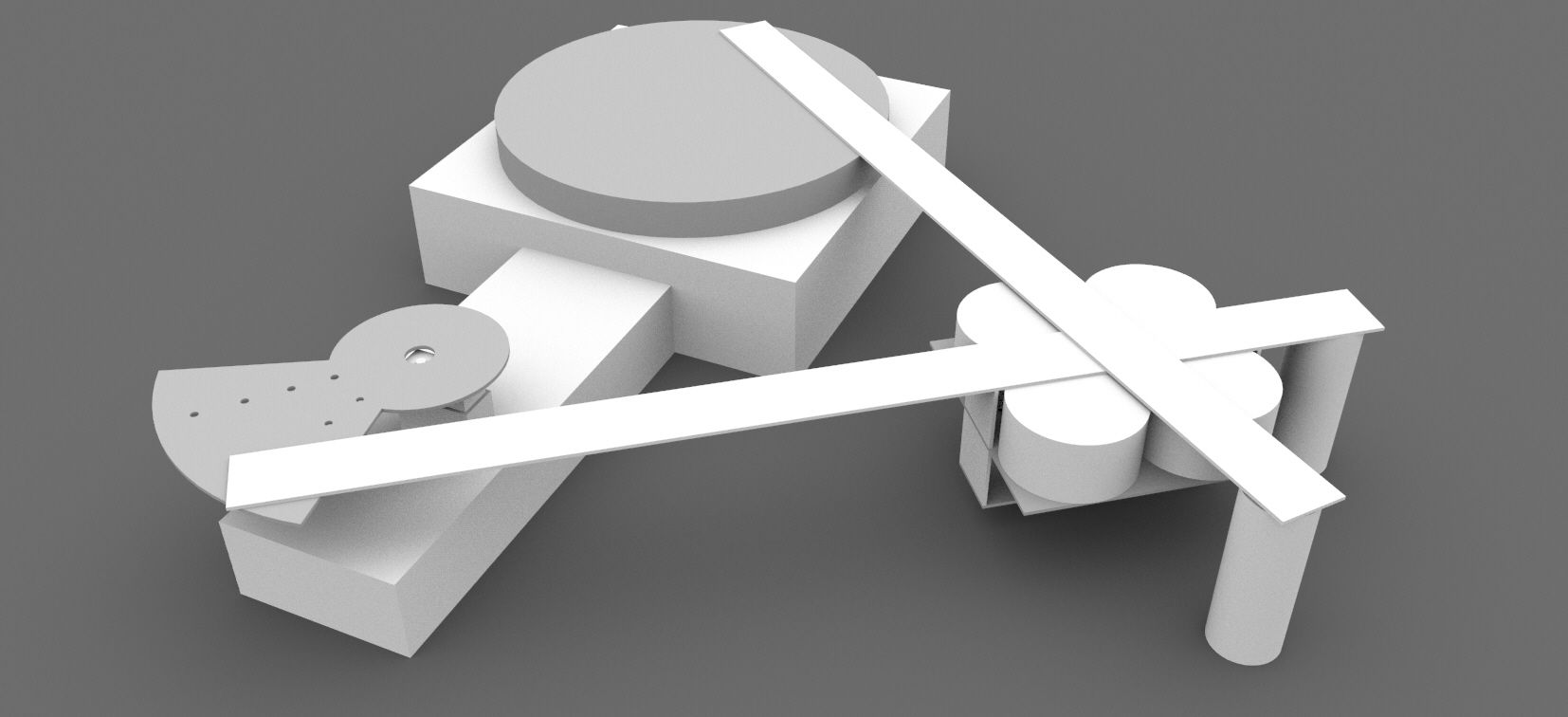

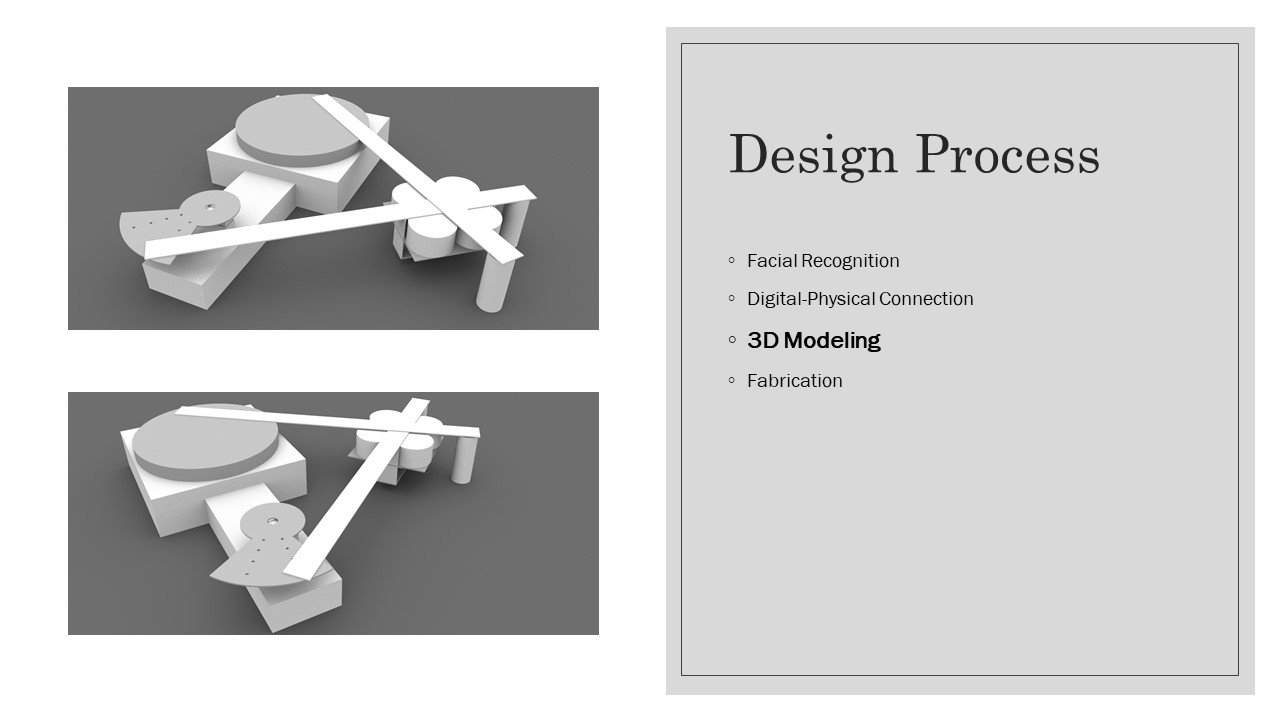
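The Python side of this link might look like the sketch below. It assumes the pyserial package, a baud rate of 9600 matching `Serial.begin(9600)` in the Arduino sketch, and a hypothetical protocol in which each expression is sent as its label index followed by a newline; the port name and function names are placeholders.

```python
import time

# Label order is an assumption; it must match the order the Arduino sketch expects.
EMOTIONS = ['angry', 'disgust', 'fear', 'happy', 'sad', 'surprise', 'neutral']

def encode_command(emotion):
    """Encode an emotion as its label index plus a newline, as ASCII bytes."""
    index = EMOTIONS.index(emotion)  # raises ValueError for an unknown label
    return f"{index}\n".encode("ascii")

def open_arduino(port="/dev/ttyUSB0", baudrate=9600):
    """Open the serial port (placeholder name; on Windows it looks like 'COM3')."""
    import serial  # pyserial, imported lazily so encode_command works without it
    conn = serial.Serial(port, baudrate, timeout=1)
    time.sleep(2)  # many Arduino boards reset when the port is opened
    return conn

def send_emotion(conn, emotion):
    """Write one command to an already-open serial connection."""
    conn.write(encode_command(emotion))
```

Keeping one connection open for the whole video loop avoids resetting the board on every message. On the Arduino, `Serial.readStringUntil('\n')` followed by `.toInt()` would recover the index, and the Serial Monitor should be closed while the Python script is using the port.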

For the next step, I began by building prototypes out of foam. For a more precise version, I then 3D-modeled the machine in Rhino.

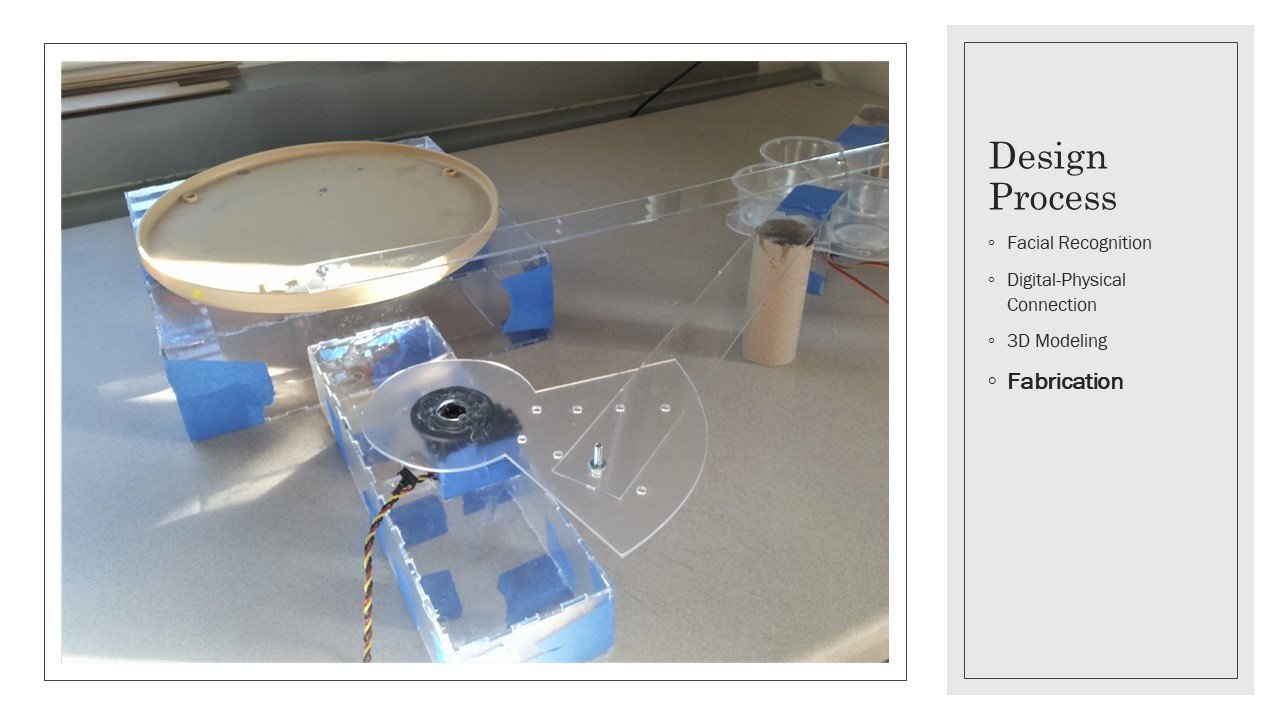

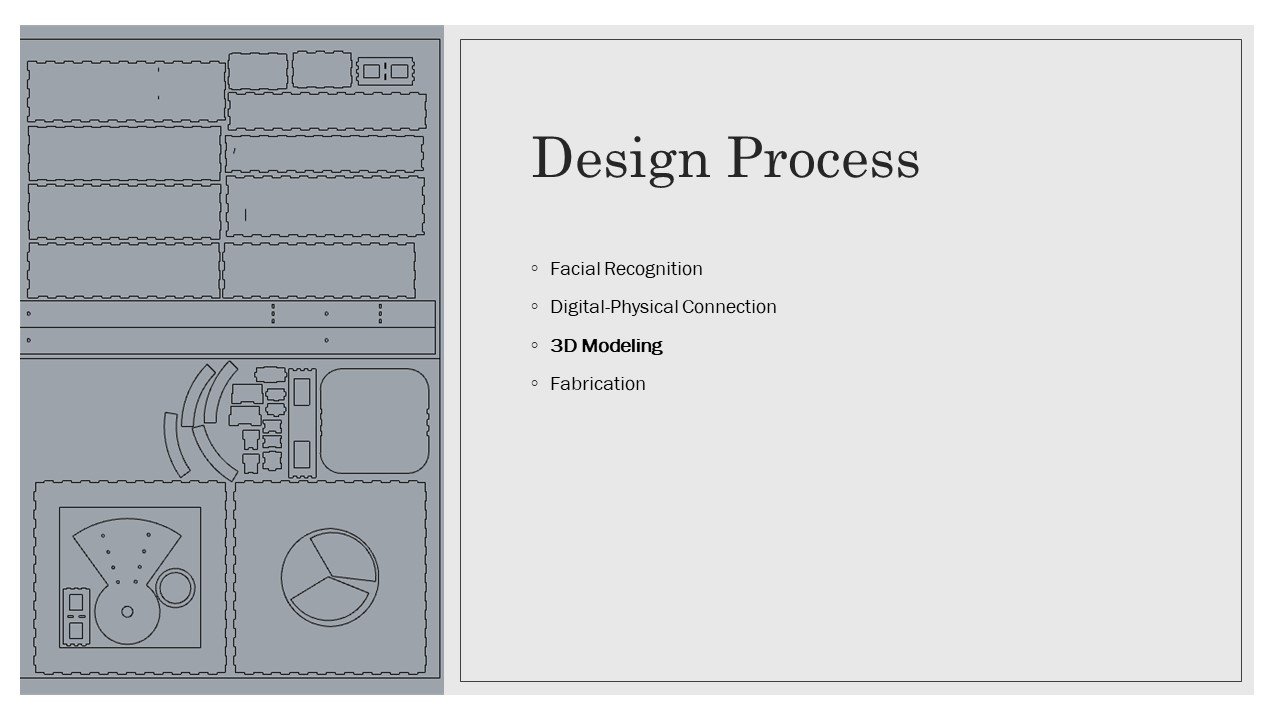

The 3D model also made it possible to laser-cut the machine's pieces.

In the final step, the laser-cut pieces were assembled to produce the final prototype.